Bayesian

It is quite hard to withstand the reasoning of Jaynes in “Probability Theory” (it is a pity he couldn’t finish his book). In chapter 5 Jaynes comes up with a beautiful example that shows how exactly the same information can lead to totally different conclusions. It is very illustrative when you think of religious, ethical, or political views. I can only do it justice by using an extended quote (forgive me!):

The new information $D$ is ‘Mr N has gone on TV with a sensational claim that a commonly used drug is unsafe’ and three viewers, Mr A, Mr B, and Mr C, see this. Their prior probabilities $P(S|I)$ that the drug is safe are $(0.9, 0.1, 0.9)$, respectively; i.e. initially Mr A and Mr C were believers in the safety of the drug, Mr B a disbeliever. But they interpret the information $D$ very differently, because they have different views about the reliability of Mr N. They all agree that, if the drug had really been proved unsafe, Mr N would be right there shouting it; that is, their probabilities $P(D|\bar{S}I)$ are $(1, 1, 1)$; but Mr A trusts his honesty while Mr C does not. Their probabilities $P(D|SI)$ that, if the drug is safe, Mr N would say that it is unsafe, are $(0.01, 0.3, 0.99)$, respectively.

Applying Bayes’ theorem […] we find their posterior probabilities $P(S|DI)$ that the drug is safe to be $(0.083, 0.032, 0.899)$, respectively. Put verbally, they have reasoned as follows:

Mr A: ‘Mr N is a fine fellow, doing a notable public service. I had thought the drug to be safe from other evidence, but he would not knowingly misrepresent the facts; therefore hearing his report leads me to change my mind and think that the drug is unsafe after all. My belief in safety is lowered […] so I will not buy any more.’

Mr B: ‘Mr N is an erratic fellow, inclined to accept adverse evidence too quickly. I was already convinced that the drug is unsafe; but even if it is safe he might be carried away into saying otherwise. So, hearing his claim does strengthen my opinion, but only [a bit]. I would never under any circumstances use the drug.’

Mr C: ‘Mr N is an unscrupulous rascal, who does everything in his power to stir up trouble by sensational publicity. The drug is probably safe, but he would almost certainly claim it is unsafe whatever the facts. So hearing his claim has practically no effect on my confidence that the drug is safe. I will continue to buy it and use it.’
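The three conclusions in the quote can be checked with a few lines of Python. This is only a minimal sketch: it takes the values from Jaynes’ example (priors 0.9, 0.1, 0.9 that the drug is safe; probabilities 0.01, 0.3, 0.99 of the claim being made if the drug is safe; probability 1 of the claim if it is unsafe), and the function and variable names are my own.

```python
def posterior_safe(prior_safe, p_claim_if_safe, p_claim_if_unsafe=1.0):
    """Posterior probability that the drug is safe, given the TV claim.

    Bayes' theorem, with the denominator expanded over 'safe' and 'unsafe'.
    """
    evidence = (prior_safe * p_claim_if_safe
                + (1.0 - prior_safe) * p_claim_if_unsafe)
    return prior_safe * p_claim_if_safe / evidence


# (prior that the drug is safe, probability of the claim if it is safe)
viewers = {
    "A": (0.9, 0.01),  # believer who trusts the man on TV
    "B": (0.1, 0.30),  # disbeliever who finds him erratic
    "C": (0.9, 0.99),  # believer who thinks he would shout "unsafe" anyway
}

posteriors = {name: posterior_safe(prior, likelihood)
              for name, (prior, likelihood) in viewers.items()}

for name, post in posteriors.items():
    print(f"Mr {name}: P(safe | claim) = {post:.3f}")
```

Same data, same rule of inference; only the prior and the assumed reliability of the source differ, and that is enough to pull the three posteriors far apart.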

Nonparametric Bayesian

This Bayesian prelude makes it clear that the structure of the prior is very important. Very interesting problems can be solved if the prior is given sufficient structure. A nice workshop on Applied Bayesian Nonparametrics was held at Como, Italy, by the Applied Bayesian Statistics School, ABS14. The lecturers were Michael Jordan and Francois Caron, who talked about Dirichlet processes and Beta processes, respectively. If you don’t know this Michael Jordan, you might instead have heard at one time or another of one of his students, among them Yoshua Bengio, Tommi Jaakkola, Andrew Ng, Emanuel Todorov, and Daniel Wolpert. But, enough small talk, let’s face the truth.

The Chinese Restaurant Process

It sounds a bit childish, but the Chinese Restaurant Process is an actual stochastic process with great expressive power. Imagine a restaurant with a seemingly infinite number of tables. The first customer enters the restaurant and picks the first table. A second customer enters the restaurant and sits with the first customer with probability $1/(1+\alpha)$ and at a new table with probability $\alpha/(1+\alpha)$. Here $\alpha$ is a so-called concentration parameter which causes more or fewer tables to be occupied relative to the number of customers. For example, $\alpha = 1$ gives a probability of opening a new table for the second person equal to $1/2$. The peculiarity of this process is that if you check the probability for any two random people, say customers $i$ and $j$, the probability that they sit at the same table is also exactly that, a $1/2$! The number of people sitting at the same table as the first customer is on average the same as the number of people sitting at the same table as the last customer! These properties have to do with the fact that this is an exchangeable process. You can hone your intuition with the assignments from Francois if you like.
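If you want to see the exchangeability at work, a small simulation helps. The sketch below uses the standard seating rule (customer number $n+1$ opens a new table with probability $\alpha/(n+\alpha)$ and otherwise joins an existing table with probability proportional to its occupancy). The function names, the seed, and the number of runs are arbitrary choices of mine.

```python
import random


def chinese_restaurant_process(num_customers, alpha, rng):
    """Seat customers one by one; return the table index of each customer."""
    tables = []   # tables[k] = number of customers currently at table k
    seating = []
    for n in range(num_customers):  # n customers are already seated
        # Draw a point in [0, n + alpha): the first 'alpha' units mean
        # "new table", the rest is split among tables by occupancy.
        r = rng.random() * (n + alpha)
        if r < alpha:
            tables.append(1)
            seating.append(len(tables) - 1)
        else:
            r -= alpha
            for k, count in enumerate(tables):
                if r < count:
                    break
                r -= count
            tables[k] += 1
            seating.append(k)
    return seating


def same_table_frequency(i, j, num_customers, alpha, runs, seed=0):
    """Fraction of simulated restaurants in which customers i and j share a table."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(runs):
        seating = chinese_restaurant_process(num_customers, alpha, rng)
        hits += seating[i] == seating[j]
    return hits / runs


alpha = 1.0
first_pair = same_table_frequency(0, 1, 10, alpha, runs=20000)
later_pair = same_table_frequency(3, 8, 10, alpha, runs=20000)
print(first_pair, later_pair)  # both should be close to 1 / (1 + alpha)
```

The first pair is the easy case you can compute by hand; the second pair arrives much later, yet the estimated frequency should land near the same value.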

De Finetti proved that an (infinite) sequence of exchangeable observations can be written as a mixture: there is an underlying hidden (latent) random distribution, and conditional on it the observations are i.i.d. (independent and identically distributed). It is interesting (and not trivial) that such a distribution can be found! The distribution over this latent quantity that corresponds to the Chinese Restaurant Process is one of the most used objects in nonparametric Bayesian methods, namely the Dirichlet process.
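Written out, the statement looks as follows. The notation is my own choice and not taken from the workshop: $G$ is the latent random distribution, $P$ its law, $G_0$ the base distribution of the Dirichlet process, and $\theta_i$ the parameter (the "dish") attached to customer $i$. The first line is de Finetti's representation for an infinite exchangeable sequence; the second is the Dirichlet process model; the third is the predictive rule you get after integrating out $G$, which is exactly the Chinese Restaurant seating rule.

```latex
\begin{align}
p(x_1, \dots, x_n) &= \int \prod_{i=1}^{n} G(x_i) \, \mathrm{d}P(G) \\
G \sim \mathrm{DP}(\alpha, G_0), &\qquad \theta_i \mid G \overset{\text{i.i.d.}}{\sim} G \\
\theta_{n+1} \mid \theta_1, \dots, \theta_n &\sim \frac{\alpha}{\alpha + n} \, G_0 + \frac{1}{\alpha + n} \sum_{i=1}^{n} \delta_{\theta_i}
\end{align}
```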

Hierarchy

Why are these stochastic processes so interesting? With a Dirichlet process it is possible to model a generative procedure in which data generates clusters ad infinitum. New data can always lead to new clusters. This kind of mechanism might exist in other machine learning methods. For example, in ART (Adaptive Resonance Theory) new category nodes are added during the learning process. However, nonparametric Bayesian hierarchies are the first method that describes how to do this in a fully Bayesian setting. This means no adhockeries. The inference can be done over the structure of the model and the parameters of the model simultaneously. In other words, the model reasons over the parameters per table as well as over the number of tables, at the same time.
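To get a feeling for "clusters ad infinitum": under the Chinese Restaurant seating rule, customer number $i+1$ opens a new table with probability $\alpha/(\alpha+i)$, so the expected number of occupied tables is just the sum of these probabilities. A minimal sketch, with a function name of my own:

```python
def expected_num_tables(num_customers, alpha):
    """Expected number of occupied tables after num_customers arrivals.

    Customer i+1 (with i customers already seated) opens a new table with
    probability alpha / (alpha + i); the expectation is the sum of these.
    """
    return sum(alpha / (alpha + i) for i in range(num_customers))


for n in (10, 100, 1000, 10000):
    print(n, round(expected_num_tables(n, alpha=1.0), 2))
```

The sum keeps growing with the number of customers, but only roughly logarithmically, so new clusters never stop appearing while the model still stays parsimonious.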

Examples

Explaining how inference proceeds is something for another blog post (check the code). For now it is more interesting to see how these methods have already been applied.

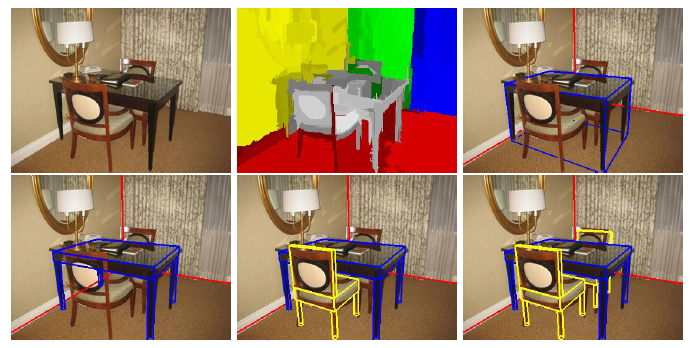

An illustrative example is from Del Pero et al. in “Understanding Bayesian rooms using composite 3D object models”.


One of the chairs you can see very well, but the other is occluded by the table. Performing inference over this structure requires a representation of a chair and the ability to reason over several of those composite objects. Only then does it become feasible to infer the full chair behind this table.

Another example is speaker diarization. Imagine an organized meeting in which people are talking, supposedly not at the same time, but sequentially. How do we perform inference on the number of speakers present, and how can we build an inference engine that benefits from Bob talking at time $t_1$ and him talking again at a later time $t_2$? The system should keep improving every time Bob talks!

This can be solved by a so-called sticky Hierarchical Dirichlet Process defined on a very well-known model structure, a Hidden Markov Model (see “A sticky HDP-HMM with application to speaker diarization”). A Hidden Markov Model is built on a Markovian assumption, hence its name. This means that the state sequence by itself cannot store the long-term dependencies required by this application: a person talking at the beginning and at the end of a conversation. Remarkably, this model actually performed on par with state-of-the-art algorithms that were very specific to this application!

Slides

The problems you will encounter when applying nonparametric Bayesian methods in the real world are manifold. Foremost, the sampling methods used to approximate a full Bayesian method are not yet adapted to these hierarchical schemes. Although it might seem that having an abstraction of, say, a chair would allow you to move around, copy, and delete this entire chair through an inference process, this kind of abstraction handling has not been handed to the sampling algorithms themselves. All approximation methods, be they variational methods or sampling methods such as slice sampling or Gibbs sampling, are suddenly quite non-Bayesian in nature! I have yet to see a sampling procedure that itself incorporates a prior (such as a hierarchical structure). The methods are general in the sense that the sampler is able to sample any probability density function, but it is not able to take advantage of prior knowledge about this function (except for approximating a Bayesian formulation that entails this structure).

You can find my presentation with my questions about how to apply nonparametric Bayesian methodology to simple line estimation as in RANSAC or Hough here.
